Accurate 2D Object Detection and Tracking Across Video Frames

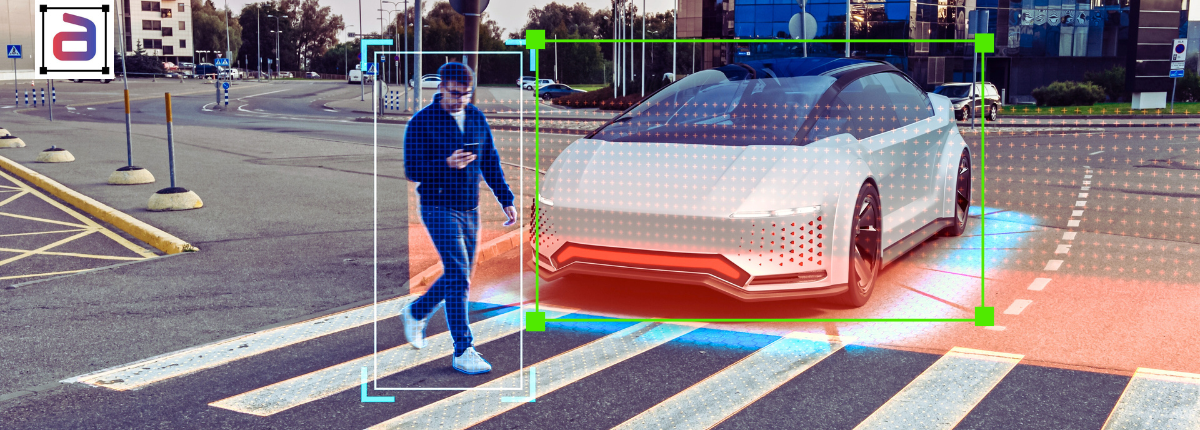

Dynamic video environments demand annotations that remain consistent over time. Object detection and tracking accuracy improve significantly when spatial boundaries are labeled carefully across every frame.

Video Annotation Built for Temporal Consistency and Enterprise AI Using 2D Bounding Boxes

Video-based perception models need consistent accuracy across time, motion, and changing environments. In these use cases, 2D video bounding boxes are a core part of video annotation because they help AI learn how objects look, move, overlap, and interact across a full sequence. Each frame must maintain the correct position and size while remaining consistent from one frame to the next. Trained video annotators follow clear temporal rules to handle motion blur, camera shake, scale changes, partial visibility, and crowded scenes.

With more than 20 years of outsourcing and data annotation experience and a secure global delivery model, Annotera provides scalable and cost-efficient workflows for autonomous systems, surveillance, retail analytics, robotics, sports intelligence, and smart infrastructure. This approach creates stable training data, reducing model drift and improving tracking performance. As a result, teams deploy production-grade video AI systems faster and with more confidence.

Services: Structured Video Annotation Capabilities Using 2D Bounding Boxes

Designed to support long-form and high-frame-rate video workloads, 2D video bounding boxes enable consistent object localization across time while preserving spatial accuracy in complex real-world scenes.

Frame-Level Object Labeling

Objects are annotated in every frame to maintain precise spatial positioning throughout the video sequence.

Motion-Aware Box Tracking

Bounding boxes follow object movement smoothly, supporting reliable trajectory and tracking model training.
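As an illustration of how boxes can follow an object between manually labeled frames, here is a minimal sketch of linear keyframe interpolation. It is one common technique and not necessarily the workflow used here; the `Box` type and the `interpolate_boxes` helper are hypothetical names introduced for this example, and the sketch assumes roughly constant motion between the two keyframes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixels (hypothetical type for this sketch)."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate a box for every frame between two keyframes.

    Returns a dict mapping frame index -> Box, keyframes included.
    Assumes the object moves and scales at a constant rate in between.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must be greater than start_frame")
    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at start, 1.0 at end
        boxes[frame] = Box(
            x=start_box.x + t * (end_box.x - start_box.x),
            y=start_box.y + t * (end_box.y - start_box.y),
            w=start_box.w + t * (end_box.w - start_box.w),
            h=start_box.h + t * (end_box.h - start_box.h),
        )
    return boxes


# Usage: two hand-labeled keyframes, four frames apart.
track = interpolate_boxes(0, Box(0, 0, 10, 10), 4, Box(40, 20, 10, 30))
```

Interpolated boxes are only a starting point: frames with abrupt motion or occlusion would still need manual review and correction.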

Occlusion-Aware Annotation

Partially visible or temporarily hidden objects are labeled using consistent visibility and truncation rules.

Crowded Scene Handling

Overlapping and closely packed objects are annotated clearly in traffic, retail, and public environments.

Multi-Class Video Annotation

Multiple object categories are labeled within the same sequence without class confusion or overlap errors.

High-Resolution Video Support

Bounding boxes maintain precision across HD and 4K video inputs used in enterprise AI programs.

Temporal Drift Correction

Frame-to-frame validation prevents jitter, misalignment, and bounding box instability.
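One way a frame-to-frame check could be sketched is by measuring the overlap (intersection over union, IoU) between a track's boxes in consecutive frames and flagging sudden drops. This is an illustrative assumption, not a description of the validation actually used; the function names and the `min_iou=0.5` threshold are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_unstable_frames(track, min_iou=0.5):
    """Return indices of frames whose box overlaps the previous frame's box
    by less than min_iou (an assumed, tunable threshold)."""
    flagged = []
    for i in range(1, len(track)):
        if iou(track[i - 1], track[i]) < min_iou:
            flagged.append(i)
    return flagged


# Usage: the box jumps abruptly at index 2, then moves smoothly again.
track = [(0, 0, 10, 10), (1, 0, 11, 10), (30, 30, 40, 40), (31, 30, 41, 40)]
unstable = flag_unstable_frames(track)
```

A low IoU is not always an error: small or fast-moving objects can legitimately overlap very little between frames, so flagged frames are candidates for human review rather than automatic rejections.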

Quality-Controlled Video Outputs

Annotation datasets undergo multi-stage review to ensure accuracy, continuity, and consistency.

Features: Capabilities That Strengthen Video AI Training

Built on mature delivery processes, 2D video bounding boxes support reliable object detection and tracking across diverse video conditions and enterprise use cases.

Frame-Level Precision

Bounding boxes remain tight and accurate across changing object positions and scales.

Temporal Consistency Validation

Specialized quality checks ensure stability and continuity throughout video sequences.

Cross-Industry Video Expertise

Annotation teams support video AI use cases spanning mobility, security, retail, and robotics.

Scalable Video Operations

Large volumes of video data are processed efficiently without compromising annotation quality.

Why Choose Us? Enterprise Delivery for Video Annotation Programs

Proven operational maturity and domain expertise ensure dependable video datasets aligned with performance, security, and scalability requirements. In large-scale AI initiatives, 2D video bounding boxes are delivered with a focus on consistency, accuracy, and production readiness.

Extensive Video Annotation Experience

Decades of experience supporting enterprise video-based AI initiatives across industries.

Flexible Engagement Models

Cost-efficient pricing structures support pilots, expansions, and long-term programs.

Enterprise Security Standards

SOC-aligned environments protect sensitive video content and proprietary data.

Custom Annotation Frameworks

Project-specific rules align with camera setups, object classes, and AI objectives.

Rigorous Quality Governance

Multi-layer validation ensures frame-level accuracy and temporal stability.

Scalable Annotation Workforce

Trained teams enable rapid ramp-up for high-volume video annotation needs.

Frequently Asked Questions: Got Questions? We've Got Answers for You

Here are answers to common questions about 2D video bounding box annotation, accuracy, and outsourcing to help businesses scale their video AI projects effectively.

What are 2D video bounding boxes?

2D video bounding boxes refer to rectangular annotations applied to objects across every frame of a video sequence to define their spatial extent over time. Unlike static annotations, these boxes must adapt continuously as objects move, change scale, interact, or partially disappear. By preserving spatial boundaries and temporal continuity throughout the video timeline, 2D video bounding boxes enable AI systems to learn object position, motion behavior, and interaction patterns within dynamic visual environments.
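To make the idea concrete, the sketch below shows one possible in-memory representation of a single tracked object: a stable track ID, a class label, and one box per frame with an occlusion flag. No export schema is specified on this page, so every field and method name here is a hypothetical choice made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FrameBox:
    """One box for one frame (hypothetical schema)."""
    frame: int
    x: float  # left edge in pixels
    y: float  # top edge in pixels
    w: float  # width in pixels
    h: float  # height in pixels
    occluded: bool = False  # object partially or fully hidden in this frame


@dataclass
class Track:
    """All boxes for one object, kept in increasing frame order."""
    track_id: int
    label: str
    boxes: List[FrameBox] = field(default_factory=list)

    def add(self, box: FrameBox) -> None:
        # Enforce strictly increasing frame numbers within a track.
        if self.boxes and box.frame <= self.boxes[-1].frame:
            raise ValueError("frames must be added in increasing order")
        self.boxes.append(box)

    def missing_frames(self) -> List[int]:
        """Frames between the first and last box that have no annotation."""
        if not self.boxes:
            return []
        present = {b.frame for b in self.boxes}
        first, last = self.boxes[0].frame, self.boxes[-1].frame
        return [f for f in range(first, last + 1) if f not in present]


# Usage: a pedestrian labeled on frames 10, 11, and 13.
ped = Track(track_id=1, label="pedestrian")
ped.add(FrameBox(frame=10, x=100, y=50, w=40, h=90))
ped.add(FrameBox(frame=11, x=103, y=50, w=40, h=90))
ped.add(FrameBox(frame=13, x=109, y=51, w=41, h=91, occluded=True))
gaps = ped.missing_frames()
```

Keeping the track ID constant across frames is what distinguishes this from a set of independent image annotations: it lets a tracking model associate the same object over time.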

How do 2D video bounding boxes differ from image bounding boxes?

Image bounding boxes label objects in isolated frames without accounting for temporal change. In contrast, 2D video bounding boxes must remain consistent across consecutive frames, handling motion, occlusion, camera movement, and perspective shifts. This requirement for frame-to-frame alignment and stability makes 2D video bounding boxes significantly more complex. Their temporal nature allows video AI systems to learn continuity and movement rather than treating each frame as an independent image.

Which industries rely on 2D video bounding boxes?

Industries that depend on reliable object detection and tracking in real-world conditions rely heavily on 2D video bounding boxes. Autonomous driving platforms use them to track vehicles and pedestrians, surveillance systems apply them for identity monitoring, and retail analytics leverage them to analyze shopper behavior. Robotics, sports analytics, manufacturing inspection, and smart city applications also depend on 2D video bounding boxes to train detection and tracking models using continuous video data.

What challenges arise during video bounding box annotation?

Why outsource 2D video bounding boxes to Annotera?

Outsourcing 2D video bounding boxes to Annotera provides access to trained video annotation specialists operating within secure, SOC-aligned delivery environments. Scalable workflows support high-volume video datasets while maintaining strict accuracy thresholds. Through structured annotation frameworks, multi-layer quality validation, and enterprise-grade governance, Annotera delivers production-ready 2D video bounding box datasets that support reliable, high-performance video AI systems.