Depth-Aware 3D Cuboid Annotation for Spatial Video Intelligence

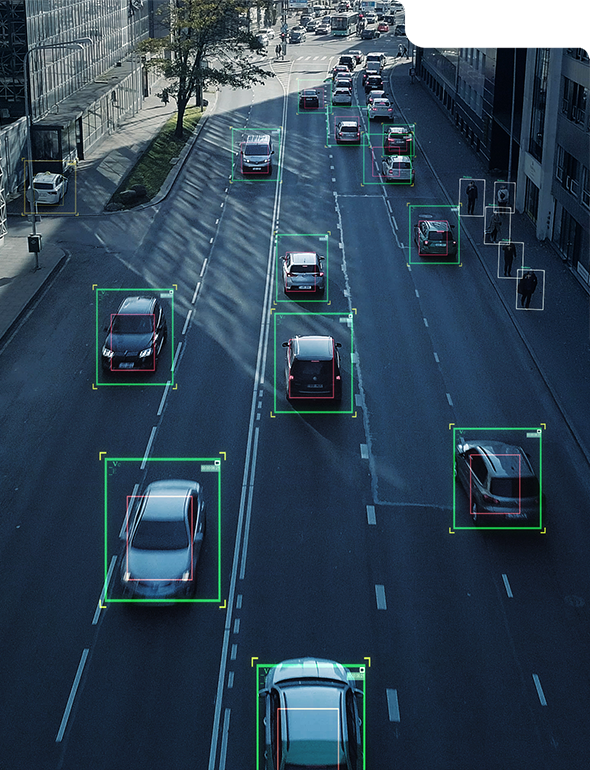

Spatial intelligence in video data improves when objects are interpreted with depth, volume, and orientation. Accurate spatial labeling enables AI systems to reason about distance, movement, and real-world interactions across time.

3D Cuboid Video Annotation Designed for Spatial Awareness and Real-World AI

Modern video perception systems need more than flat object labels. 3D cuboid video annotation helps AI models understand how objects occupy space and move through a scene over time. Each cuboid captures an object's height, width, depth, and rotation, and it stays consistent across frames, which is critical for tracking and prediction.
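To make "height, width, depth, and rotation" concrete, here is a minimal sketch of one common cuboid parameterization: a center point, three extents, and a single yaw (heading) angle around the vertical axis. It assumes a world frame with z pointing up, and it uses "length" for the extent along the heading direction (the dimension this page calls depth). The `Cuboid` class and its field names are illustrative only, not an Annotera schema.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Illustrative 3D cuboid in an assumed world frame with z pointing up."""
    cx: float      # center x (meters)
    cy: float      # center y (meters)
    cz: float      # center z (meters)
    length: float  # extent along the heading direction (meters)
    width: float   # extent across the heading direction (meters)
    height: float  # vertical extent (meters)
    yaw: float     # heading angle around the vertical axis (radians)

    def corners(self) -> list[tuple[float, float, float]]:
        """Return the 8 corner points, rotating the footprint by yaw."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        pts = []
        for dx in (-self.length / 2, self.length / 2):
            for dy in (-self.width / 2, self.width / 2):
                for dz in (-self.height / 2, self.height / 2):
                    # Rotate the offset in the ground plane, then translate.
                    x = self.cx + c * dx - s * dy
                    y = self.cy + s * dx + c * dy
                    pts.append((x, y, self.cz + dz))
        return pts

    def volume(self) -> float:
        """Object volume implied by the three extents (cubic meters)."""
        return self.length * self.width * self.height


# A car-sized box 10 m ahead, rotated 90 degrees.
box = Cuboid(cx=10.0, cy=2.0, cz=0.75, length=4.5, width=1.8, height=1.5, yaw=math.pi / 2)
print(len(box.corners()), round(box.volume(), 3))
```

Keeping a single yaw angle (rather than a full 3D rotation) is a simplification that suits ground vehicles and pedestrians; objects that pitch or roll would need additional rotation parameters.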

Annotators follow clear spatial and temporal rules to handle camera motion, perspective shifts, occlusion, truncation, and fast-moving objects. Backed by more than 20 years of outsourcing and data annotation experience and a secure, global delivery model, Annotera delivers scalable, cost-efficient workflows for autonomous driving, robotics, smart infrastructure, warehouse automation, and advanced surveillance. The result is cleaner 3D training data that improves distance estimation, trajectory prediction, and scene understanding for production-grade video AI systems.

Structured 3D Cuboid Video Annotation Capabilities

Designed for depth-aware video intelligence, 3D cuboid video annotation supports consistent spatial labeling across frames while maintaining accurate object geometry in complex real-world scenes.

Frame-Level Cuboid Placement

Cuboids are applied consistently across every frame to preserve spatial continuity over time.

Object Depth Modeling

Height, width, and depth are captured to reflect real-world object dimensions.

Orientation and Rotation Mapping

Heading direction and rotational changes are annotated to support spatial reasoning.

Moving Object Cuboids

Cuboids follow object motion smoothly across frames for trajectory learning.

Occlusion and Truncation Rules

Partially visible objects are labeled using standardized spatial visibility guidelines.

Multi-Object Spatial Annotation

Multiple objects are annotated simultaneously with correct depth relationships.

Camera Perspective Alignment

Cuboid placement accounts for camera angle and viewpoint variation.

Quality-Controlled Spatial Outputs

Annotations are reviewed through multi-stage checks for spatial and temporal accuracy.
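Camera perspective alignment and truncation rules both depend on where a cuboid lands in the image. The sketch below assumes a standard pinhole camera model with corners already expressed in camera coordinates (x right, y down, z forward); the intrinsic values and the `visible_corner_ratio` helper are hypothetical, chosen only for illustration. The ratio is one simple truncation signal and says nothing about occlusion by other objects.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics (illustrative values, not a real sensor)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int   # image width in pixels
    height: int  # image height in pixels


def project(point, k: Intrinsics):
    """Project a camera-frame point (x right, y down, z forward) to pixels.

    Returns None for points at or behind the camera plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def visible_corner_ratio(corners, k: Intrinsics) -> float:
    """Fraction of cuboid corners that project inside the image bounds.

    A ratio below 1.0 suggests the object is truncated by the frame edge.
    """
    inside = 0
    for p in corners:
        uv = project(p, k)
        if uv is not None and 0 <= uv[0] < k.width and 0 <= uv[1] < k.height:
            inside += 1
    return inside / len(corners)


k = Intrinsics(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0, width=1920, height=1080)
# Corners of a 2 m cube centered 10 m in front of the camera.
cube = [(sx, sy, 10.0 + sz) for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]
print(project((0.0, 0.0, 10.0), k), visible_corner_ratio(cube, k))
```

Counting corners is deliberately coarse; a production guideline might instead clip the projected box against the image and compare areas.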

Capabilities That Strengthen Spatial Video AI

Built on mature workflows and spatial expertise, 3D cuboid video annotation delivers reliable training data for depth-aware video models operating in dynamic environments.

Accurate Spatial Geometry

Cuboid dimensions remain consistent across frames and viewpoints.

Temporal Stability Validation

Dedicated checks prevent cuboid drift and misalignment over time.

Depth-Aware Labeling Logic

Annotations reflect real-world distance and object volume relationships.

Scalable Video Operations

Large volumes of spatial video data are processed efficiently.
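Temporal stability checks can be partly automated. The sketch below shows one plausible drift check for a single object track, assuming the object is rigid (so its dimensions should not change) and that it cannot move faster than a chosen speed limit. The function name, the frame dictionary layout, and the default thresholds (`dim_tol`, `max_speed`, `fps`) are all hypothetical and would be tuned per project.

```python
import math
from statistics import median


def check_track(frames, dim_tol=0.05, max_speed=40.0, fps=10.0):
    """Flag frames of one object track that look like cuboid drift.

    frames: list of dicts with keys "center" (x, y, z) and "dims" (l, w, h),
    one entry per consecutive frame. Thresholds are illustrative defaults.
    Returns a sorted list of (frame_index, reason) tuples.
    """
    issues = []
    # A rigid object's size should not change, so compare each frame's
    # dimensions with the per-track median (robust to a few bad frames).
    ref = [median(f["dims"][i] for f in frames) for i in range(3)]
    for idx, f in enumerate(frames):
        for i, (d, r) in enumerate(zip(f["dims"], ref)):
            if abs(d - r) > dim_tol * r:
                issues.append((idx, f"dimension {i} deviates from track median"))
                break
    # Consecutive centers should not move farther than max_speed allows.
    max_step = max_speed / fps
    for idx in range(1, len(frames)):
        step = math.dist(frames[idx - 1]["center"], frames[idx]["center"])
        if step > max_step:
            issues.append((idx, "center jump exceeds speed limit"))
    return sorted(issues)


track = [
    {"center": (0.0, 0.0, 0.0), "dims": (4.5, 1.8, 1.5)},
    {"center": (1.0, 0.0, 0.0), "dims": (4.5, 1.8, 1.5)},
    {"center": (2.0, 0.0, 0.0), "dims": (5.2, 1.8, 1.5)},  # cuboid resized
    {"center": (9.0, 0.0, 0.0), "dims": (4.5, 1.8, 1.5)},  # 7 m jump in 0.1 s
]
print(check_track(track))
```

Automated flags like these only route frames to a human reviewer; they do not decide whether a change is a genuine error.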

Why Choose Us? Enterprise Delivery for Spatial Video Annotation Programs

Operational maturity and domain experience ensure dependable datasets aligned with enterprise performance and security requirements. In large-scale AI initiatives, video annotation is delivered with a strong focus on spatial accuracy and temporal consistency.

Extensive Spatial Annotation Experience

More than 20 years of data annotation experience applied to depth-aware video AI initiatives.

Flexible Engagement Models

Cost-efficient pricing supports pilots, expansions, and long-term programs.

Enterprise Security Standards

SOC-aligned environments protect sensitive video and perception data.

Custom Spatial Annotation Frameworks

Cuboid rules align with sensor setup and AI objectives.

Rigorous Quality Governance

Multi-layer validation ensures spatial and temporal reliability.

Scalable Annotation Workforce

Trained teams support rapid ramp-up for large video programs.


Frequently Asked Questions

Here are answers to common questions about 3D cuboid video annotation, spatial accuracy, and outsourcing to help businesses scale their depth-aware video AI projects effectively.

What is 3D cuboid video annotation?

3D cuboid video annotation involves labeling objects in video using three-dimensional bounding structures that represent height, width, depth, and orientation across consecutive frames. Unlike flat annotations, this approach captures how objects occupy physical space and how their geometry changes as they move through the environment. By preserving spatial structure and temporal continuity, 3D cuboid video annotation enables AI systems to understand real-world object dimensions, relative positioning, and movement behavior within dynamic video scenes.

Why is depth important in video-based AI systems?

Depth information is essential for interpreting distance, scale, and spatial relationships between objects in a scene. Video-based AI systems rely on depth cues to estimate how far objects are, anticipate collisions, and predict trajectories over time. These capabilities are learned effectively through 3D cuboid video annotation, which provides structured spatial context that reflects real-world geometry. As a result, models trained with depth-aware annotations demonstrate improved scene understanding and more reliable decision-making in complex environments.
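As a simple illustration of how cuboid depth supports distance and collision reasoning, the sketch below computes the range from a sensor at the origin to a cuboid center and a constant-velocity time-to-collision (TTC) estimate from two consecutive frames. The function names are hypothetical, and the estimate is deliberately simplified: it uses center range only, ignoring object extent and lateral motion.

```python
import math


def range_to(center, sensor=(0.0, 0.0, 0.0)) -> float:
    """Euclidean distance from the sensor origin to a cuboid center (meters)."""
    return math.dist(center, sensor)


def time_to_collision(prev_center, curr_center, dt: float) -> float:
    """Constant-velocity TTC estimate from two consecutive cuboid centers.

    Uses center-to-sensor range only, so it ignores object extent and
    lateral motion. Returns math.inf when the object is not approaching.
    """
    d_prev = range_to(prev_center)
    d_curr = range_to(curr_center)
    closing_speed = (d_prev - d_curr) / dt
    if closing_speed <= 0:
        return math.inf
    return d_curr / closing_speed


# A cuboid center moving from 20 m to 19 m in 0.1 s closes at 10 m/s.
print(range_to((0.0, 0.0, 20.0)), time_to_collision((0.0, 0.0, 20.0), (0.0, 0.0, 19.0), 0.1))
```

Accurate cuboid depth matters here because any range error feeds directly into both the closing speed and the TTC.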

Which industries use 3D cuboid video annotation?

Industries that require spatial awareness and depth perception rely heavily on 3D cuboid video annotation. Autonomous driving platforms use it to understand vehicle and pedestrian positioning, while robotics and warehouse automation systems apply it for navigation and object manipulation. Smart city initiatives, logistics operations, and advanced surveillance systems also leverage 3D cuboid video annotation to train spatially aware video AI models that operate accurately in real-world conditions.

What challenges exist in spatial video annotation?

Spatial video annotation introduces challenges such as perspective shifts caused by camera angle changes, partial or full occlusion, fast-moving objects, camera motion, and ambiguity in depth perception. Maintaining consistent geometry across long video sequences further increases complexity. 3D cuboid video annotation addresses these challenges through standardized spatial rules, orientation handling, and frame-to-frame validation processes that ensure cuboids remain aligned, stable, and accurate over time.
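One common technique for keeping orientation smooth through fast motion or brief occlusion is to label keyframes and interpolate between them, taking care that heading follows the shortest angular path (so 350 degrees to 10 degrees turns 20 degrees, not 340). The sketch below is an assumed, generic approach rather than a description of Annotera's tooling; the dictionary layout and function names are hypothetical.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def interpolate_cuboid(k0: dict, k1: dict, t: float) -> dict:
    """Interpolate between two keyframe cuboids at fraction t in [0, 1].

    Center and dimensions are blended linearly; yaw follows the shortest
    angular path between the two keyframe headings.
    """
    def lerp(a: float, b: float) -> float:
        return a + (b - a) * t

    dyaw = wrap_angle(k1["yaw"] - k0["yaw"])
    return {
        "center": tuple(lerp(a, b) for a, b in zip(k0["center"], k1["center"])),
        "dims": tuple(lerp(a, b) for a, b in zip(k0["dims"], k1["dims"])),
        "yaw": wrap_angle(k0["yaw"] + dyaw * t),
    }


k0 = {"center": (0.0, 0.0, 0.0), "dims": (4.5, 1.8, 1.5), "yaw": math.radians(350)}
k1 = {"center": (2.0, 0.0, 0.0), "dims": (4.5, 1.8, 1.5), "yaw": math.radians(10)}
mid = interpolate_cuboid(k0, k1, 0.5)
print(mid["center"], math.degrees(mid["yaw"]))
```

Interpolated frames are a starting point; annotators would still verify them, especially where an object changes speed or direction between keyframes.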

Why outsource 3D cuboid video annotation to Annotera?

Outsourcing 3D cuboid video annotation to Annotera provides access to trained spatial annotation specialists operating within secure, SOC-aligned delivery environments. Scalable workflows support large volumes of depth-intensive video data while maintaining strict accuracy thresholds. Through domain-aware cuboid frameworks, multi-layer quality validation, and enterprise-grade governance, 3D cuboid video annotation delivered by Annotera ensures production-ready datasets that support reliable depth-aware video AI systems.